A gentle introduction to data analysis in hockey

Some background to accompany the three-part masterclass series on statistical analysis from Paris

We asked Simon Blanford to host a three-part series of masterclasses on statistical analysis of the women’s games in Paris. On the opening day of the Games we went live with part one of the series, an introduction.

The article below accompanies those live masterclasses and gives you some background.

Statistical analysis... in a different way (1/3)

The on-demand video of this first masterclass is available to our paid subscribers here or in the XPS app.

Also mark your calendar for our second masterclass, on the analysis of the women’s pool games, just ahead of the quarter-finals on Monday August 5 at 9am (Paris time).

TLDR

Hockey makes extensive use of video analysis as a coaching tool. However, the level of investigation into the data the game produces lags far behind. Currently hockey uses simple summaries of game data (circle penetrations, shots, penalty corner conversions) without the necessary step of statistically investigating these data to look for differences that may provide insights into how teams play, how players perform and are valued, and into hockey more broadly. The statistical framework already available, and the predictive models developed in other sports, suggest a promising avenue for broadening hockey’s analytic capacity, which in turn could offer deeper insights into how the game is played and how players are assessed.

Some sports have traditionally been statistically minded. One thinks of baseball or cricket with their almanacs full of batting averages and bowling or pitching efficiency. And it is no real surprise that the best-known example of player and game analysis entered the public consciousness via baseball and the Brad Pitt-led film, Moneyball.

Nowadays the use of analysis is not confined to bat-and-ball games but extends to many sports, and goal-oriented invasion games are not immune from this trend. In the last fifteen to twenty years football has gone through a revolution in its attitude to data and analysis, such that a former head of research at Liverpool FC, who ended up running a six-person analysis team, was not from football at all but was formerly a theoretical physicist from Cambridge University. Such is the impact of analysis, and of the statistical models produced to describe, interpret and predict game play, that the way football is played has changed to accommodate what analytic outputs suggest.

What about hockey?

What about our sport then, what about hockey? Well, it would be fair to say that hockey is not at the cutting edge of sports analysis.

A recent summary of studies published on hockey in research journals counted just eight relating to performance analysis (fewer than for Aussie rules football) and concluded that only a small handful of these were actually any good. Perhaps this shouldn’t be surprising. Hockey, though global in its reach, is still very much a minority sport. Yet it is a sport that regularly talks ‘analysis’. If this is not analysis, what are commentators going on about when they cite the game’s statistics, the number of times ‘things’ happen, and make regular reference to the FIH datahub? And don’t teams have analysts looking at video, coding out various game-related moments of interest for later discussion among the coaches?

Well, to think so probably conflates video analysis and statistical analysis, which are really two complementary but rather different techniques. On the data side of things there generally seems to be some misunderstanding about the difference between data, ‘stats’ and analysis. To clarify, a simple definition of statistical analysis would run something like this:

“Statistical analysis, or statistics, is the process of collecting and analysing data to identify patterns and trends, remove bias and inform decision making.”

There is no doubt that in hockey we collect a lot of data; the FIH datahub mentioned above is full of it. But it is very rare for these data to be analysed in a way that allows the unbiased identification of patterns that can inform coaching discussions and, subsequently, decision making.

What might an analytic process look like?

If that’s the case, what might an analytic process look like? First we’d collect and then summarise the data. Let’s say we are interested in a big-picture analysis of hockey generally, for example the number of goals scored in the men’s and women’s games. We collect a nice large data set (1983 to 2023) and summarise it in a simple table:

And in the form of descriptive figures, here just looking at the average goals per game:

We could leave it here. After all, 4.7 goals is a larger number than 3.6, and this is indeed where much hockey analysis stops. But are 4.7 goals really different to 3.6? How can we tell? Guessing is where bias can creep in (particularly when differences are subtle), making informed conclusions hard to come by.
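As a rough sketch of the “collect and summarise” step, the snippet below turns per-game goal totals into a small summary table (games, total goals, mean and standard deviation per category). The goal counts are invented purely for illustration and are not the 1983 to 2023 data set; only Python’s standard `statistics` module is used.

```python
from statistics import mean, stdev

# Hypothetical per-game goal totals, invented for illustration only.
goals_per_game = {
    "men": [5, 3, 6, 4, 7, 5, 2, 6],
    "women": [3, 4, 2, 5, 3, 4, 1, 4],
}

def summarise(games: dict[str, list[int]]) -> dict[str, dict[str, float]]:
    """Return game count, total goals, mean and standard deviation per category."""
    table = {}
    for category, goals in games.items():
        table[category] = {
            "games": len(goals),
            "total_goals": sum(goals),
            "mean": mean(goals),
            "sd": stdev(goals),  # sample standard deviation: spread around the mean
        }
    return table

summary = summarise(goals_per_game)
for category, row in summary.items():
    print(f"{category:>6}: {row['games']} games, {row['total_goals']} goals, "
          f"mean {row['mean']:.2f} per game (sd {row['sd']:.2f})")
```

A table like this is descriptive only: it says the means differ, not whether that difference is bigger than chance variation would produce.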

Time to turn to the more formal side of statistics. Methods of inferential statistics and modelling can examine and determine the relationships between groups or categories (here, the difference between men’s and women’s goal scoring), ultimately helping us understand whether that difference of roughly a goal a game is real and consistent, or one that has arisen by chance and cannot be relied upon as a consistent effect.

This is usually decided by first assuming there is no difference, that is, that the result we see has simply happened by chance, and then testing how plausible that assumption is. If the analysis indicates that, were there no real difference, a result at least as large as ours would occur less than 5% of the time (in statistical jargon, p < 0.05), then we can infer there is a real, ‘statistically significant’ difference. In the example here, a small analysis comparing the number of goals scored in the men’s and women’s games gives p = 0.01: if the two games really produced the same number of goals, a gap this large would turn up only about 1% of the time. That is a very clear difference, indicating that men score more than women consistently and repeatably.

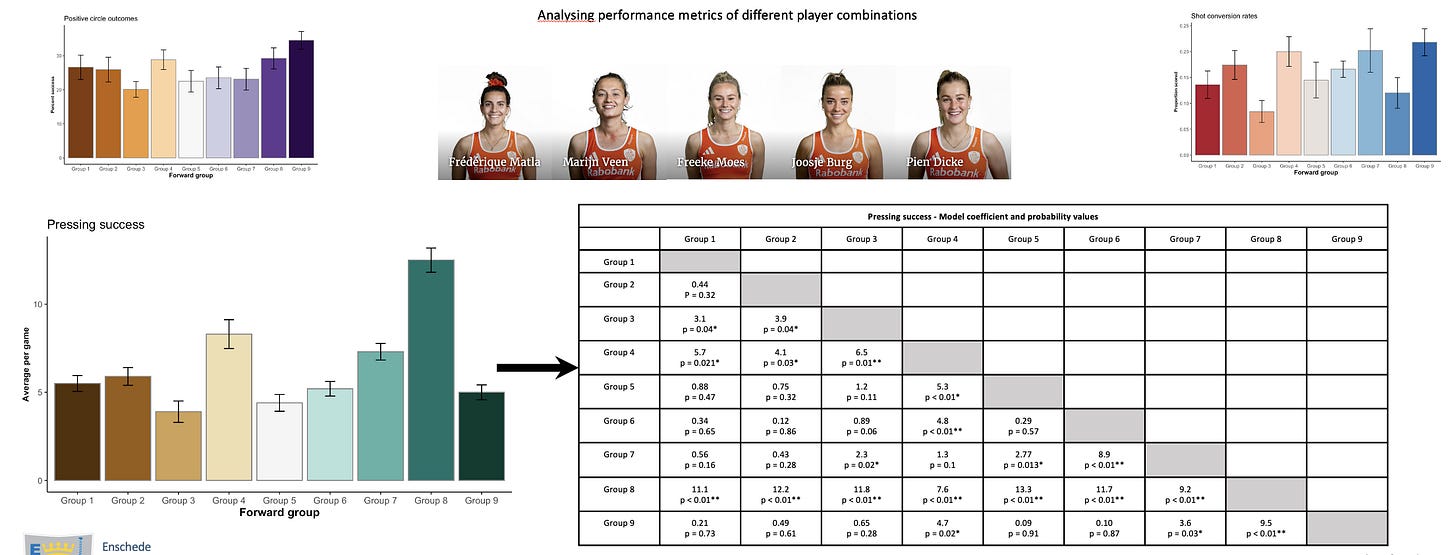
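The article does not say which test produced p = 0.01, so the sketch below uses a permutation test as one simple, assumption-light way of putting the “assume no difference, then check” logic into practice (a t-test or a Poisson regression on goal counts would be other common choices). Under the no-difference assumption the men’s and women’s labels are interchangeable, so we shuffle them many times and count how often a shuffled gap is at least as large as the real one. The goal counts and the `permutation_test` helper are hypothetical.

```python
import random

# Hypothetical per-game goal totals, invented for illustration only.
men = [5, 3, 6, 4, 7, 5, 2, 6]
women = [3, 4, 2, 5, 3, 4, 1, 4]

def mean_diff(a, b):
    return sum(a) / len(a) - sum(b) / len(b)

def permutation_test(a, b, n_perm=10_000, seed=1):
    """Two-sided permutation test for a difference in means.

    Shuffle the pooled values, re-split them into groups of the original sizes,
    and count how often the shuffled difference is at least as large (in absolute
    size) as the observed one.
    """
    rng = random.Random(seed)
    observed = mean_diff(a, b)
    pooled = list(a) + list(b)
    n_a = len(a)
    extreme = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        # Small tolerance guards against floating-point ties.
        if abs(mean_diff(pooled[:n_a], pooled[n_a:])) >= abs(observed) - 1e-12:
            extreme += 1
    # Adding one to numerator and denominator keeps the p-value above zero.
    p_value = (extreme + 1) / (n_perm + 1)
    return observed, p_value

observed, p = permutation_test(men, women)
print(f"observed difference {observed:.2f} goals per game, p = {p:.3f}")
print("significant at the 5% level" if p < 0.05 else "not significant at the 5% level")
```

The same function could compare circle entries for your team and your opponents, or any other pair of groups.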

This broad-scale example could just as easily be applied to simple comparisons, such as the number of circle entries your team makes compared with your opponents, or to more complicated analyses seeking to assess the performance of the various forward combinations that may be on the pitch at any one time in a match.

Predictive models

Statistical analysis is not restricted to asking about differences. Predictive models can be developed to estimate the probability of an event occurring. The event might be losing possession, or entering the attacking quarter of the pitch, or the circle. It might be whether the goalkeeper is going to make a save or the attacker’s shot is going to score. In fact, any event can be the subject of predictive modelling. These kinds of models are now common in football and other sports, and perhaps the best known is the ‘expected goals’ model, or simply the xG model. Such has been the impact of this approach since its development about fifteen years ago that many major television providers of football use it for goal-scoring estimates, the values it produces are often quoted in journalists’ reports, and supporters regularly debate them over a post-match beer.

The basic point of an xG model is to calculate the probability that a shot will become a goal: the expectation of a goal. To make this calculation, a mathematical model is trained on past data to determine which of the many variables that might influence whether a shot becomes a goal actually do predict one. Retaining those variables, the model is then used to estimate the scoring probability of subsequent shots. For example, here are all the shots taken by the Dutch women’s team in the last two Eurohockey tournaments.

The circles are scaled to the probability of each of these shots becoming a goal - the larger the circle, the higher the probability. Outputs like this prompt some interesting questions. Are your players aware of the value of their shot when they take it? Should they be? Would it make a difference if you trained to try and maximise shot values indicated by model output? Football does this already.
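The article does not describe the form of its xG model. A common choice for this kind of yes/no outcome is logistic regression, so the sketch below fits one on a handful of hypothetical shots using two assumed predictors, distance to goal and shooting angle, and then scores new shots. The shot data, the choice of predictors and the `fit_logistic` helper are all illustrative assumptions; the sketch also skips the variable-selection step described above. In practice a library such as scikit-learn’s `LogisticRegression` and thousands of shots would be used instead.

```python
import math

# Hypothetical training shots: (distance to goal in m, angle to goal in degrees, scored 0/1).
shots = [
    (4.0, 40.0, 1), (5.5, 35.0, 1), (6.0, 20.0, 0), (7.5, 30.0, 1),
    (9.0, 15.0, 0), (10.0, 25.0, 0), (12.0, 10.0, 0), (13.5, 20.0, 0),
    (3.5, 30.0, 1), (8.0, 40.0, 0), (11.0, 35.0, 1), (14.0, 5.0, 0),
]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic(rows, lr=0.05, epochs=5000):
    """Fit P(goal) = sigmoid(b0 + b1*distance + b2*angle) by batch gradient descent
    on the log-loss. Features are standardised so one learning rate suits both."""
    xs = [(d, a) for d, a, _ in rows]
    ys = [g for _, _, g in rows]
    n = len(rows)
    means = [sum(x[j] for x in xs) / n for j in range(2)]
    sds = [math.sqrt(sum((x[j] - means[j]) ** 2 for x in xs) / n) for j in range(2)]
    z = [[(x[j] - means[j]) / sds[j] for j in range(2)] for x in xs]
    w = [0.0, 0.0, 0.0]  # intercept, distance weight, angle weight
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for zi, yi in zip(z, ys):
            err = sigmoid(w[0] + w[1] * zi[0] + w[2] * zi[1]) - yi
            grad[0] += err
            grad[1] += err * zi[0]
            grad[2] += err * zi[1]
        w = [wj - lr * gj / n for wj, gj in zip(w, grad)]

    def predict(distance, angle):
        """Expected-goal value (scoring probability) of a new shot."""
        zd = (distance - means[0]) / sds[0]
        za = (angle - means[1]) / sds[1]
        return sigmoid(w[0] + w[1] * zd + w[2] * za)

    return w, predict

weights, xg = fit_logistic(shots)
print(f"xG of a 5 m shot at 30 degrees: {xg(5.0, 30.0):.2f}")
print(f"xG of a 13 m shot at 10 degrees: {xg(13.0, 10.0):.2f}")
```

Each value the model returns is the kind of probability the circles in the shot map are scaled to.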

Model outputs can also be summarised by game. Below are the cumulative xG values for each chance the Dutch and Belgian women’s teams created in the Eurohockey 2023 final. By the end of the game the accumulated total represents the xG for each team (2.6 for the Netherlands and 1.3 for Belgium). Summaries like this can be useful for assessing the shot values created by each team and the pattern of chance creation through the game.
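Building a cumulative xG timeline is just a running total of each team’s chance values in match order. The sketch below assumes each chance is recorded as (minute, team, xG); the chances and their values are invented and are not the Eurohockey 2023 final data, and `cumulative_xg` is a hypothetical helper.

```python
from itertools import accumulate

# Hypothetical chances in one 60-minute match: (minute, team, xG of the chance).
chances = [
    (6, "NED", 0.12), (14, "BEL", 0.30), (21, "NED", 0.45), (33, "NED", 0.08),
    (38, "BEL", 0.22), (47, "NED", 0.61), (52, "BEL", 0.15), (58, "NED", 0.34),
]

def cumulative_xg(chances, team):
    """Return (minute, running xG total) pairs for one team, in match order."""
    ordered = sorted((m, x) for m, t, x in chances if t == team)
    running = accumulate(x for _, x in ordered)
    return [(m, total) for (m, _), total in zip(ordered, running)]

for team in ("NED", "BEL"):
    timeline = cumulative_xg(chances, team)
    steps = ", ".join(f"{m}': {total:.2f}" for m, total in timeline)
    print(f"{team}: {steps} (match xG {timeline[-1][1]:.2f})")
```

The last value in each timeline is that team’s match xG; plotting the timelines as step lines gives the kind of chart described above.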

One of the most valuable and commonly used summaries of xG values assesses how a team is performing compared with model expectations over several games. It is valuable because one game will not capture a trend, whereas examining a sequence of five or more games will indicate more clearly whether a team is under- or over-performing model expectations. Here, for example, are the Dutch and Belgian teams’ xG values and the actual number of goals scored during their last two Eurohockey campaigns.

Finally, model outputs can be summarised for individual players too. Here is Frédérique Matla’s scoring performance in comparison to model expectations.
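Both the team and the player comparisons come down to the same arithmetic: add up actual goals, subtract the summed xG, and watch how that gap develops. The sketch below assumes records of (xG, goals), one per game for a team or one per shot for a player (goals then being 0 or 1). The numbers and the `goals_minus_xg` helper are hypothetical.

```python
from itertools import accumulate

def goals_minus_xg(records):
    """Running goals-minus-xG total.

    records is a list of (xG, goals) pairs: one per game for a team, or one per shot
    for a player (goals is then 0 or 1). A positive final value means more goals than
    the model expected (over-performing); a negative value means under-performing.
    """
    return list(accumulate(goals - xg for xg, goals in records))

# Hypothetical data, invented for illustration only.
team_games = [(2.1, 3), (1.4, 1), (3.0, 4), (0.9, 2), (2.6, 2), (1.8, 3)]
player_shots = [(0.35, 1), (0.12, 0), (0.48, 1), (0.20, 0), (0.61, 1)]

team_trend = goals_minus_xg(team_games)
player_trend = goals_minus_xg(player_shots)
print("team, goals minus xG after each game:", [round(v, 2) for v in team_trend])
print("player, goals minus xG after all shots:", round(player_trend[-1], 2))
```

Looking at the running total rather than a single game’s gap reflects the point above: one match says little, while a gap that keeps growing across five or more games is more likely to be a genuine trend.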

In all the above examples xG estimates are derived from a model developed from shots made in the women’s international game. But the same process can be used to develop models for specific levels of play, specific teams (one each for the Belgians and Dutch for example) or for individual players. A model could be developed for Frédérique Matla from her previous shot and goal data and then used in future to assess how she is doing in comparison to xG estimates specifically tailored to her.

Predictive models don’t just orbit goalscoring but can be developed for many aspects of game play. In football there are models that estimate the probability of a shot being saved, of a pass to the shooter becoming a goal-scoring assist and of the next pass being a turnover, as well as models that value the ball-moving actions of the team and of individual players. Such models, like xG, take data to develop but can, alongside inferential modelling, ultimately provide deep insights into how the game is played, how your team is performing and whether individual players are meeting, exceeding or falling short of expectations.

Hockey is not currently examining this area in any detail, but given the rapid uptake and implementation of statistical analysis in other sports, perhaps it is an area we should pay more attention to in future.

Three takeaways

1. Hockey does a lot of good video analysis. But the game carries out very little formal analysis of the large amounts of data it collects, often choosing instead to look at simple summaries of match data.

2. There is room for more detailed analyses such as inferential tests and models to help determine differences between groups and categories (comparing teams, different times of the game) and predictive models to estimate event probabilities (scoring, entering the circle, defending a corner).

3. The outputs from statistical analysis allow for unbiased and informed discussion and decision making as well as prompting coaching ideas for session design and tactical insights.